Introduction to Project Aria Docs

This page is based on technical writing I did for Project Aria. For the most up-to-date documentation, go to Project Aria Docs.

Project Aria is a research platform designed to accelerate egocentric (from a human perspective) AI research. Project Aria data, tooling, and resources help advance research around the world in areas such as computer vision, robotics, and contextual AI.

Many of the world’s leading research communities will need to work together to solve the significant challenges in these fields. To support these efforts, Project Aria provides Open Science Initiatives (OSI) and the Aria Research Kit (ARK).

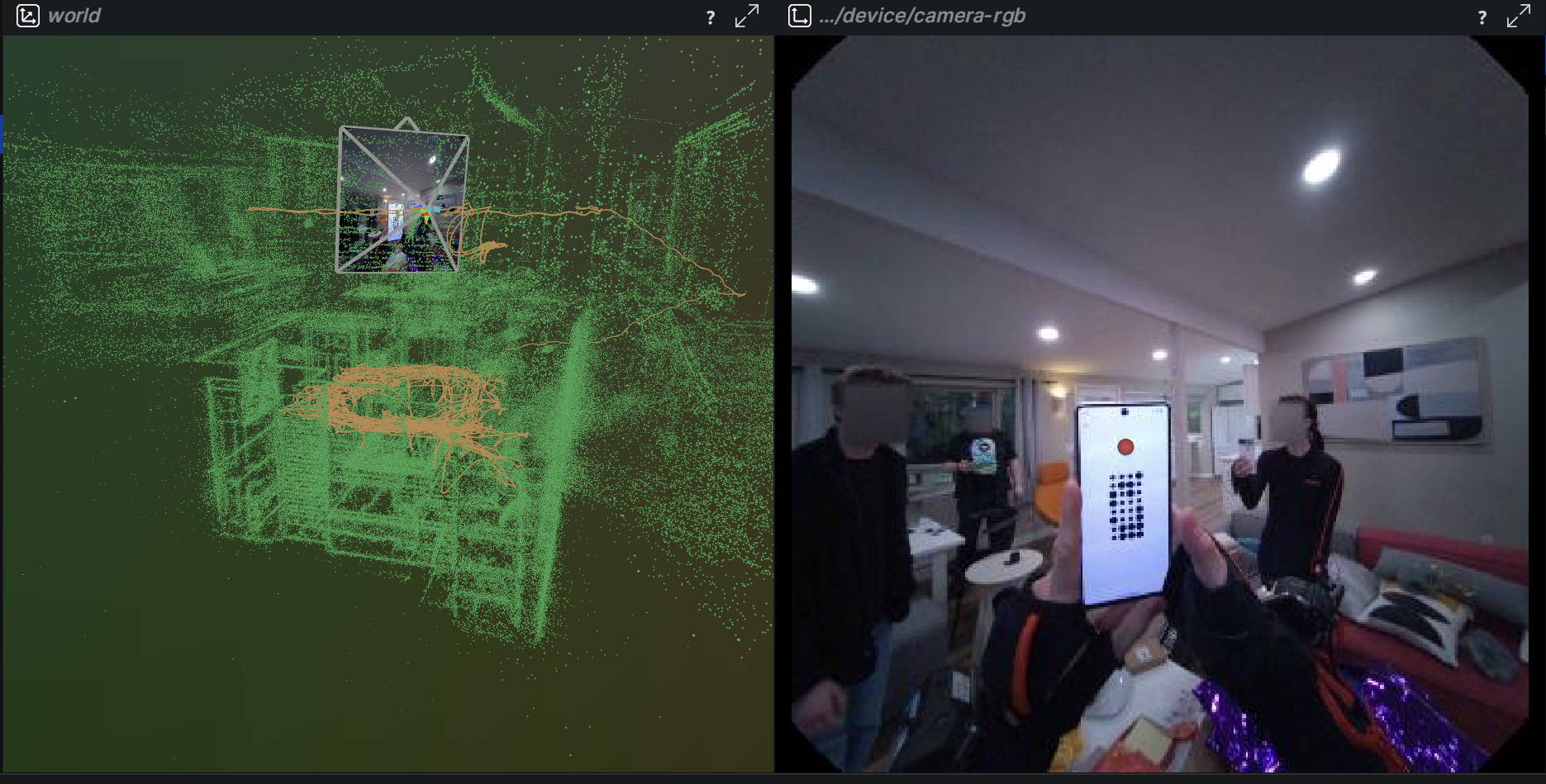

Figure 1: Visualization from the Nymeria open dataset

New to Project Aria?

- Go to projectaria.com to get an overview of the program

- Go to Aria Dataset Explorer to search and preview some of Aria’s open data

- Go to the Project Aria FAQ for an overview of our current service offerings, capabilities and software ecosystem

Want to get involved with Open Science Initiatives (OSI)?

We believe that open source accelerates the pace of innovation in the world. We’re excited to share our code, models and data.

Open datasets powered by Project Aria data include:

- Aria Everyday Activities (AEA) - a re-release of Aria’s first Pilot Dataset, updated with new tooling and location data, to advance the state of machine perception and AI

- Aria Digital Twin (ADT) - a real-world dataset with a hyper-accurate digital counterpart and comprehensive ground-truth annotation

- Aria Synthetic Environments (ASE) - a procedurally-generated synthetic Aria dataset for large-scale ML research

- HOT3D - a new benchmark dataset for vision-based understanding of 3D hand-object interactions

- Nymeria - a large-scale multimodal egocentric dataset for full-body motion understanding

Research challenges that use our open datasets are posted on projectaria.com.

Models created using Aria data include:

- EgoBlur - an open source AI model from Meta that preserves privacy by detecting and blurring PII in images. It is designed to work with both egocentric data (such as Aria data) and non-egocentric data.

- Project Aria Eye Tracking - open source inference code for the pre-March 2024 Eye Gaze Model used by Machine Perception Services (MPS)

Aria data is recorded using VRS, an open source file format. Our open source code, Project Aria Tools, provides a C++ and Python interface that helps people incorporate VRS data into a wide range of downstream applications.

Interested in getting access to the Aria Research Kit (ARK)?

Through our OSI data, tooling, and research challenges, we aim to support the broadest possible audience in the research community. For researchers who also need access to a physical Project Aria device, we offer the Aria Research Kit (ARK).

Project Aria devices can be used to:

- Collect data

  - In addition to capturing data from a single device, TICSync can be used to capture time-synchronized data from multiple Aria devices in a shared world location

  - Cloud-based Machine Perception Services (MPS) are available to generate SLAM, Multi-SLAM, Eye Gaze, and Hand Tracking derived data outputs. Partner data is only used to serve MPS requests. Partner data is not available to Meta researchers or Meta’s affiliates.

- Stream data

  - Use the Project Aria Client SDK to stream and subscribe to data on your local machine

Before applying for the ARK, please explore our OSI offerings and FAQ. Once you are confident that the ARK is a good match for your research, please apply for the Aria Research Kit.

Our team will review your application and reach out to you with next steps if you are approved for the ARK.

Just received Project Aria glasses?

- About ARK provides an overview of the different ways you can use the device

- Getting Started With Project Aria Glasses covers how to set up your glasses

- The Glasses User Manual provides a range of information, including how to factory reset your glasses

If you encounter any issues, go to our Support page for multiple ways to get in touch!