MPS Output - Eye Gaze Data

This page is based on technical writing I did for Project Aria. For the most up-to-date documentation, go to Project Aria Docs.

Eye Gaze Data Format

Project Aria's Machine Perception Services (MPS) uses Aria's Eye Tracking (ET) camera images to estimate the direction the user is looking. This eye gaze estimate is expressed in the Central Pupil Frame (CPF). Eye Gaze outputs may be included in Open Dataset releases, and Project Aria Partners can request MPS processing of their own data.

Eye Gaze outputs are available for all recordings made with Eye Tracking cameras enabled. Partner data is not made available to Meta researchers or Meta’s affiliates. Go to MPS Data Lifecycle for more details about how partner data is processed and stored.

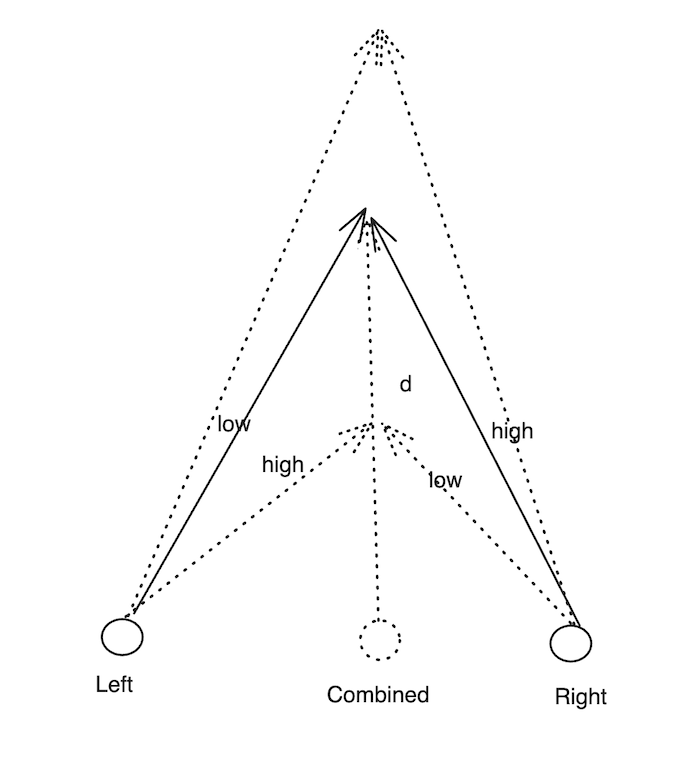

In March 2024, we updated our eye gaze model to support depth estimation. We do this by providing left and right eye gaze directions (yaw values) along with the depth at which these gaze directions intersect (translation values).

Figure 1: Diagram of the March 2024 model, showing vergence and the left, right and combined eye gaze directions.

In the new model, the convergence points and distances are derived from the predicted gaze directions. The combined direction’s yaw is used to populate the yaw field of the EyeGaze object for backwards compatibility. The pitch is common to left, right and combined gaze directions.

Eye Gaze MPS file outputs are:

- summary.json - high level report on MPS eye gaze generation

- general_eye_gaze.csv - based on the standard eye gaze configuration

- personalized_eye_gaze.csv - only if you’ve made the recording with in-session Eye Gaze Calibration

Further resources

- Visualize Data Using Python

- Visualize Data Using C++

- Eye Gaze Code Snippets, which include:

  - Data loading

  - Vector conversion (yaw and pitch to a 3D vector)

  - Coordinate system (CPF_To_Device)

general_eye_gaze.csv

general_eye_gaze.csv outputs are available for all recordings made with Eye Tracking cameras.

At this time, all Open Dataset Eye Gaze data was computed using the older model. Aria Digital Twin (ADT) used their ground truth system to compute eye gaze depth for their release.

Where a cell shows 0, the data has not been provided for that model, but to ensure backwards compatibility it will be represented as 0 in Project Aria Tools readers.

| Column | Type | Pre March 2024 Model | New Model |
|---|---|---|---|
| tracking_timestamp_us | int | The timestamp, in microseconds, of the eye tracking camera frame in the device time domain. The MPS location output also contains pose estimations in the same time domain, so these timestamps can be used directly to infer the device pose from the MPS location output. | Same. |
| yaw_rads_cpf | float | Eye gaze yaw angle in radians in the CPF frame. The yaw angle is the angle between the projection of the eye gaze vector (originating at CPF) on the XZ plane and the Z axis in the CPF frame. | 0 (0 represents not provided). However, this value can be computed using the Project Aria Open Source Eye Tracking Tool and is populated automatically when the file is read with our data utilities. |
| pitch_rads_cpf | float | Eye gaze pitch angle in radians in the CPF frame. The pitch angle is the angle between the projection of the eye gaze vector (originating at CPF) on the YZ plane and the Z axis in the CPF frame. | This now corresponds to the common pitch of the left and right gaze directions. |
| depth_m | float | 0 | Absolute depth in meters of the 3D gaze point in the CPF frame. Depth values are capped at 4 m. For timestamps where the predicted values are degenerate, this cell is empty. |
| yaw_low_rads_cpf | float | Lower bound of the confidence interval for the yaw estimation. | 0 |
| pitch_low_rads_cpf | float | Lower bound of the confidence interval for the pitch estimation. | 0 |
| yaw_high_rads_cpf | float | Upper bound of the confidence interval for the yaw estimation. | 0 |
| pitch_high_rads_cpf | float | Upper bound of the confidence interval for the pitch estimation. | 0 |
| left_yaw_rads_cpf | float | 0 | Left eye gaze yaw angle in radians in the CPF frame. The left yaw angle is the angle between the projection of the left eye gaze vector (originating at CPF) on the XZ plane and the Z axis in the CPF frame. |
| right_yaw_rads_cpf | float | 0 | Right eye gaze yaw angle in radians in the CPF frame. The right yaw angle is the angle between the projection of the right eye gaze vector (originating at CPF) on the XZ plane and the Z axis in the CPF frame. |
| left_yaw_low_rads_cpf | float | 0 | Lower bound of the left eye gaze yaw angle in radians in the CPF frame. |
| right_yaw_low_rads_cpf | float | 0 | Lower bound of the right eye gaze yaw angle in radians in the CPF frame. |
| left_yaw_high_rads_cpf | float | 0 | Upper bound of the left eye gaze yaw angle in radians in the CPF frame. |
| right_yaw_high_rads_cpf | float | 0 | Upper bound of the right eye gaze yaw angle in radians in the CPF frame. |
| tx_left_eye_cpf | float | 0 | Translation along the X direction from the CPF origin to the left eye position. |
| ty_left_eye_cpf | float | 0 | Translation along the Y direction from the CPF origin to the left eye position. |
| tz_left_eye_cpf | float | 0 | Translation along the Z direction from the CPF origin to the left eye position. |
| tx_right_eye_cpf | float | 0 | Translation along the X direction from the CPF origin to the right eye position. |
| ty_right_eye_cpf | float | 0 | Translation along the Y direction from the CPF origin to the right eye position. |
| tz_right_eye_cpf | float | 0 | Translation along the Z direction from the CPF origin to the right eye position. |
| session_uid | string | Unique identifier for a session within the VRS file. | Same. |
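The yaw and pitch definitions in the table imply a simple conversion to a 3D direction: if yaw is measured in the XZ plane from the Z axis and pitch in the YZ plane from the Z axis, then x/z = tan(yaw) and y/z = tan(pitch). The following is a minimal sketch of that conversion. The function name `gaze_unit_vector` is hypothetical, and the sketch assumes the gaze vector has a positive Z component; for production use, prefer the conversion utilities referenced under Further resources.

```python
import math


def gaze_unit_vector(yaw_rads: float, pitch_rads: float) -> tuple:
    """Return a unit gaze direction (x, y, z) in the CPF frame.

    Assumption: the gaze vector points towards +Z, so we can fix z = 1
    before normalizing.
    """
    # yaw: angle in the XZ plane from the Z axis  -> x / z = tan(yaw)
    # pitch: angle in the YZ plane from the Z axis -> y / z = tan(pitch)
    x = math.tan(yaw_rads)
    y = math.tan(pitch_rads)
    z = 1.0
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)
```

With zero yaw and zero pitch this returns the Z axis of the CPF frame, which matches the definitions above.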

personalized_eye_gaze.csv

personalized_eye_gaze.csv outputs are only generated if the recording has in-session Eye Gaze Calibration data. The schema is exactly the same as general_eye_gaze.csv. The session_uids between general and personalized output will be the same.
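Because both files share one schema, a single loader can serve both. The sketch below uses only the Python standard library and is not the Project Aria Tools reader; the function names are hypothetical. It assumes the column names listed for general_eye_gaze.csv and turns empty cells (such as a degenerate depth_m) into `None` rather than 0, so they stay distinguishable from "not provided".

```python
import csv
import io


def _to_float(cell):
    """Parse a numeric cell; an empty or missing cell becomes None."""
    cell = (cell or "").strip()
    return float(cell) if cell else None


def load_eye_gaze_rows(csv_file):
    """Read eye gaze rows from an open text file into a list of dicts."""
    rows = []
    for raw in csv.DictReader(csv_file):
        row = {}
        for name, cell in raw.items():
            if name == "session_uid":
                row[name] = cell              # keep identifiers as strings
            elif name == "tracking_timestamp_us":
                row[name] = int(cell)         # microseconds, device time
            else:
                row[name] = _to_float(cell)   # angles, depth, translations
        rows.append(row)
    return rows


# Shortened, made-up sample with only a few of the columns.
sample = io.StringIO(
    "tracking_timestamp_us,yaw_rads_cpf,pitch_rads_cpf,depth_m,session_uid\n"
    "1000,0.1,-0.2,,abc\n"
    "2000,0.0,-0.1,1.5,abc\n"
)
rows = load_eye_gaze_rows(sample)
```

To read a real file, pass `open(path, newline="")` instead of the in-memory sample.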

The in-session calibration is used to compute user specific calibration (gaze correction parameters). The yaw and pitch values will be adjusted based on this calibration. If the instructions for in-session calibration are followed correctly, the calibrated eye gaze is expected to be more accurate compared to general eye gaze.

Personalized calibration parameters, pre March 2024 model

Four coefficients are used to generate the output [s_y, s_p, o_y, o_p]:

- corrected_yaw = s_y * yaw + o_y

- corrected_pitch = s_p * pitch + o_p
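As a small illustration of the two formulas above, the sketch below applies a scale and an offset to each angle. The function name and the coefficient values are made up for the example; only the coefficient order [s_y, s_p, o_y, o_p] comes from the text.

```python
def apply_calibration(yaw, pitch, params):
    """Apply the four-coefficient correction [s_y, s_p, o_y, o_p]."""
    s_y, s_p, o_y, o_p = params
    corrected_yaw = s_y * yaw + o_y
    corrected_pitch = s_p * pitch + o_p
    return corrected_yaw, corrected_pitch


# Hypothetical coefficients: scales close to 1, offsets close to 0.
corrected = apply_calibration(0.10, -0.20, [1.02, 1.05, 0.01, 0.015])
```

Identity coefficients `[1, 1, 0, 0]` leave the general eye gaze unchanged.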

Personalized calibration parameters, new model

Six coefficients are used in the new model output [s_ly, s_ry, s_p, o_ly, o_ry, o_p]:

- corrected_left_yaw = s_ly * left_yaw + o_ly

- corrected_right_yaw = s_ry * right_yaw + o_ry

- corrected_pitch = s_p * pitch + o_p

We also use a diopter delta parameter that corrects the depth:

- corrected_depth = 1 / ((1 / depth) - diopter_delta)

The diopter delta is calculated during calibration (see Stage 2 below). It is the median of the differences between 1 / predicted_depth and 1 / groundtruth_depth.
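The new-model correction can be sketched in the same way. The code below is an illustration under the formulas in this section, not the MPS implementation: the function names are hypothetical, and the input values in the usage lines are synthetic. Subtracting the diopter delta in inverse-depth (diopter) space moves 1 / depth towards the ground truth value.

```python
from statistics import median


def estimate_diopter_delta(predicted_depths_m, groundtruth_depths_m):
    """Median of (1 / predicted_depth - 1 / groundtruth_depth)."""
    return median(
        1.0 / p - 1.0 / g
        for p, g in zip(predicted_depths_m, groundtruth_depths_m)
    )


def correct_depth(depth_m, diopter_delta):
    """corrected_depth = 1 / ((1 / depth) - diopter_delta).

    Note: this divides by zero if 1 / depth equals diopter_delta.
    """
    return 1.0 / ((1.0 / depth_m) - diopter_delta)


def correct_gaze(left_yaw, right_yaw, pitch, params):
    """Apply the six coefficients [s_ly, s_ry, s_p, o_ly, o_ry, o_p]."""
    s_ly, s_ry, s_p, o_ly, o_ry, o_p = params
    return (
        s_ly * left_yaw + o_ly,
        s_ry * right_yaw + o_ry,
        s_p * pitch + o_p,
    )


# Synthetic data: predictions that are off by 0.5 diopters everywhere.
groundtruth = [2.0, 1.0, 4.0]
predicted = [1.0 / (1.0 / g + 0.5) for g in groundtruth]
delta = estimate_diopter_delta(predicted, groundtruth)
fixed = [correct_depth(p, delta) for p in predicted]
```

In this synthetic case the corrected depths recover the ground truth, because the error is a constant offset in diopters.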

General Principles

The following principles apply to general_eye_gaze.csv and personalized_eye_gaze.csv

Confidence Intervals

The confidence intervals represent the model's uncertainty estimation. A narrower interval represents higher confidence and a wider interval represents lower confidence. The confidence interval angles are in radians and in the CPF frame. Some common factors that impact uncertainty include:

- Blinking

- Hair occluding the eye tracking cameras

- Re-adjusting glasses or taking them off to clean them
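One way to use the intervals is to flag samples whose interval is unusually wide, for example around blinks. The sketch below is an assumption-laden illustration: the function name and the threshold are hypothetical, rows are plain dicts keyed by the CSV column names, and it uses the pre March 2024 columns (for the new model, the left and right yaw bounds would take the place of the combined yaw bounds).

```python
def low_confidence_timestamps(rows, max_width_rads):
    """Return timestamps whose yaw or pitch interval exceeds max_width_rads."""
    flagged = []
    for row in rows:
        yaw_width = row["yaw_high_rads_cpf"] - row["yaw_low_rads_cpf"]
        pitch_width = row["pitch_high_rads_cpf"] - row["pitch_low_rads_cpf"]
        # A wider interval means lower confidence in the estimate.
        if max(yaw_width, pitch_width) > max_width_rads:
            flagged.append(row["tracking_timestamp_us"])
    return flagged
```

The right threshold depends on the application; no recommended value is given in this page.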

For utility functions that load eye gaze data in Python and C++, see the code examples.

Session_uid

When there are multiple users in the same VRS file (one user hands the glasses to another without stopping the recording), session_uid identifies the intervals that correspond to different calibration sessions, provided in-app calibration is performed at each hand-off.

- All the rows with the same session_uid belong to the same session within the VRS file

- If there are multiple calibration sessions, the session_uid would be unique for each session

In general_eye_gaze.csv:

- There will be a single value when there is no in-session eye calibration or only one in-session calibration

- The session_uid column values will always match those in personalized_eye_gaze.csv

Examples

- No calibrated eye gaze - general_eye_gaze will have one session_uid across all rows

- One in-session calibration - general_eye_gaze will have one session_uid across all rows and this value will be identical in personalized_eye_gaze

- k > 1 in-session calibrations - general_eye_gaze will have k unique session_uid values, each new value starting when an in-session calibration begins, and these values will be identical in personalized_eye_gaze
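To see how many sessions a file contains and when each one starts and ends, rows can be grouped by session_uid. This is a minimal sketch with a hypothetical function name; it assumes rows are dicts keyed by the CSV column names.

```python
def session_intervals(rows):
    """Map each session_uid to (first_timestamp_us, last_timestamp_us, row_count)."""
    intervals = {}
    for row in rows:
        uid = row["session_uid"]
        ts = row["tracking_timestamp_us"]
        if uid not in intervals:
            intervals[uid] = [ts, ts, 1]
        else:
            entry = intervals[uid]
            entry[0] = min(entry[0], ts)
            entry[1] = max(entry[1], ts)
            entry[2] += 1
    return {uid: tuple(entry) for uid, entry in intervals.items()}
```

A recording with no calibration or a single in-session calibration should yield one key; k in-session calibrations should yield k keys.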

summary.json

The summary.json file provides a high level overview of the output for each of the major stages. This is similar to the operator summary output from the MPS location pipeline.

For each stage of the ET pipeline, there will be one section in this file. If the section is missing, that means that the stage is not applicable or was not run.

Stage 1: GazeInference (all recordings)

This stage reports whether uncalibrated eye gaze data was generated. If you are able to download the data and view the .json file, the status will be SUCCESS.

| Name | Type | Description |
|---|---|---|
| status | string | SUCCESS (if you are able to download the data and view this file) |
| message | string | Any further details, if available |

Stage 2: InSessionCalibration (if in-session calibration available)

If the recording contains one or more valid in-session calibration intervals, the ET pipeline will compute the calibration parameters.

Each calibration session found in the VRS file will generate the following information:

| Name | Type | Description |
|---|---|---|
| status | string | SUCCESS / FAIL |
| message | string | Any further details, if available |
| session_uid | string | Unique ID representing the session |
| start_time_us | int | When the first wearer starts using the Aria glasses, or when a subsequent wearer begins in-session calibration (2nd eye calibration onwards) |
| end_time_us | int | When a wearer session or recording ends |
| params | Array[float] | The calibration parameters (4 floats) |

The status should be SUCCESS unless the wearer began the in-session calibration but did not generate the necessary data. In that case, the status is FAIL.

Stage 3: CalibrationCorrection

If Stage 2 has been successful, CalibrationCorrection will contain details about calibrated eye gaze. For each calibration session, we will output the following information:

| Name | Type | Description |
|---|---|---|
| status | string | SUCCESS / FAIL |
| message | string | Any further details, if available |
| session_uid | string | Unique ID representing the session |
| generalized_gaze_error_rads | dict | General gaze error in radians |
| calibrated_gaze_error_rads | dict | Calibrated gaze error in radians |

If the previous stages completed successfully, the status for this stage should always be SUCCESS.

Example summary.json files

Scenario 1: No calibration available

This report is quite short, as no in-session calibration data is available. Eye Gaze MPS was successfully created:

    {
      "GazeInference": {
        "status": "SUCCESS"
      }
    }

Scenario 2: In-session calibration available

In this example, there were multiple calibration sessions:

- In session one, calibration was completed successfully.

- In session two, the user began the in-session calibration, but did not generate the necessary data.

    {
      "GazeInference": {
        "status": "SUCCESS"
      },
      "InSessionCalibration": [
        {
          "Status": "SUCCESS",
          "session_uid": "01ac9bf2-334a-49c6-9dc6-fdc07ab08a2a",
          "message": "",
          "start_time_us": 147588973,
          "end_time_us": 208304973,
          "num_calibu_frames": 1000,
          "parameters": [1.02361481, 1.05426864, 0.01158671, 0.01403982]
        },
        {
          "Status": "FAIL",
          "message": "Couldn't compute GT gaze vectors for the interval [487241235, 508304973]",
          "session_uid": "6063bf11-84ef-4ed5-a785-ac44b4328fdc",
          "start_time_us": 487241235,
          "end_time_us": 508304973,
          "num_calibu_frames": 10
        }
      ],
      "CalibrationCorrection": [
        {
          "status": "SUCCESS",
          "message": "",
          "session_uid": "01ac9bf2-334a-49c6-9dc6-fdc07ab08a2a",
          "generalized_gaze_error_rads": {
            "mean": 0.047444001119500284,
            "std": 0.015775822542178554,
            "min": 0.009264740570696107,
            "max": 0.16895371875829926,
            "p25": 0.036160872560797655,
            "p50": 0.04529629090291307,
            "p75": 0.05761677117669144,
            "p95": 0.0675233675673802
          },
          "calibrated_gaze_error_rads": {
            "mean": 0.037444001119500284,
            "std": 0.005775822542178554,
            "min": 0.006364740570696107,
            "max": 0.06835371875829926,
            "p25": 0.026060872560797655,
            "p50": 0.02519329090291307,
            "p75": 0.03760677117669144,
            "p95": 0.0474232675673802
          }
        },
        {
          "status": "FAILURE",
          "message": "No calibration available for this session",
          "session_uid": "6063bf11-84ef-4ed5-a785-ac44b4328fdc"
        }
      ]
    }
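A summary like the ones above can be inspected with a few lines of standard-library Python. The sketch below is illustrative and its function name is hypothetical. It assumes a stage section is either a single object (as for GazeInference) or a list of per-session objects, and it accepts both "status" and "Status" as the key, since the Scenario 2 example uses both spellings.

```python
import json


def stage_statuses(summary):
    """Map each stage name to a list of (session_uid, status) pairs."""
    report = {}
    for stage, section in summary.items():
        # GazeInference is one object; the calibration stages are lists.
        entries = section if isinstance(section, list) else [section]
        report[stage] = [
            (entry.get("session_uid"), entry.get("status", entry.get("Status")))
            for entry in entries
        ]
    return report


# Shortened version of the Scenario 2 example.
text = """
{
  "GazeInference": {"status": "SUCCESS"},
  "InSessionCalibration": [
    {"Status": "SUCCESS", "session_uid": "01ac9bf2-334a-49c6-9dc6-fdc07ab08a2a"},
    {"Status": "FAIL", "session_uid": "6063bf11-84ef-4ed5-a785-ac44b4328fdc"}
  ]
}
"""
report = stage_statuses(json.loads(text))
```

A stage that is absent from the resulting dict was not applicable or was not run, as described at the start of this section.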